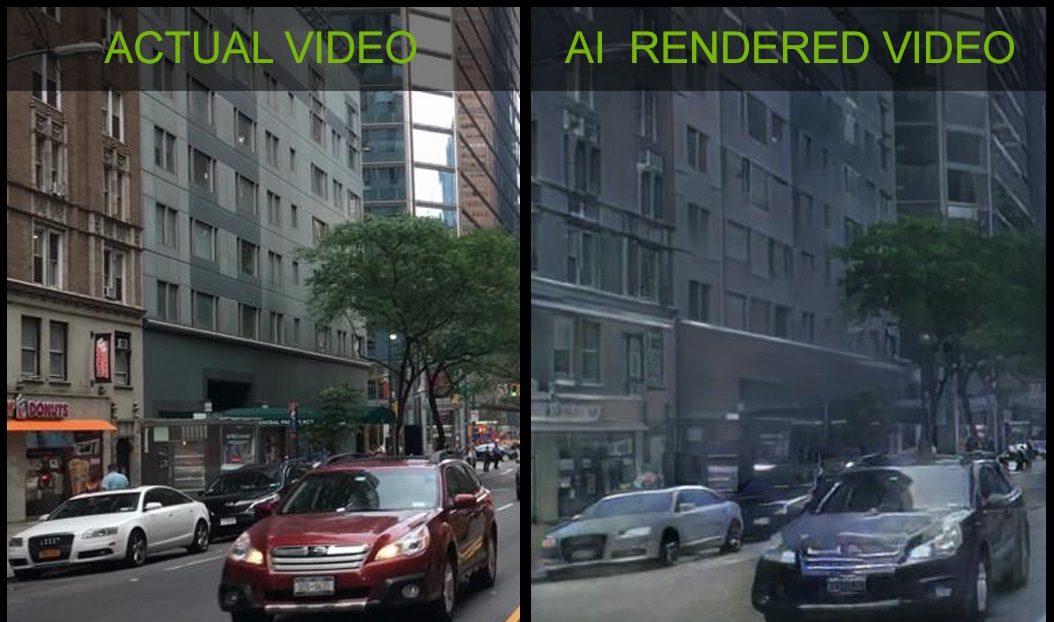

Nvidia has developed an AI that can turn real-life videos into 3D renders, which could make creating games and VR experiences simpler.

Creating 3D renders is a painstaking, time-consuming process that requires specialist skills and can be incredibly costly. By lowering these barriers, Nvidia could help more ideas move from concept to reality.

During the NeurIPS AI conference in Montreal, Nvidia set up a dedicated area to show off the technology. The company ran the demonstration on its DGX-1 supercomputer, so this isn’t achievable on the average household computer, at least for now.

The AI running on the DGX-1 took footage captured by a self-driving car’s dashcam, used a neural network to extract a high-level semantic map, and then used Unreal Engine 4 to generate the virtual world.

In the animation below, the top-left image represents the input map. The bottom-right image shows Nvidia’s video synthesis (vid2vid), while the others show competing approaches.

Bryan Catanzaro, VP of Applied Deep Learning Research at NVIDIA, said:

“NVIDIA has been inventing new ways to generate interactive graphics for 25 years, and this is the first time we can do so with a neural network.

“Neural networks, specifically generative models, will change how graphics are created. This will enable developers to create new scenes at a fraction of the traditional cost.”

The result of the demo is a simple driving game that allows participants to navigate an urban scene.

“The capability to model and recreate the dynamics of our visual world is essential to building intelligent agents,” Nvidia’s researchers wrote in a paper. “Apart from purely scientific interests, learning to synthesize continuous visual experiences has a wide range of applications in computer vision, robotics, and computer graphics.”
