Synthesized has launched a free tool which aims to quickly identify and remove dangerous biases in algorithms.

As humans, we all have biases. These biases, often unconsciously, end up in algorithms which are designed to be used across society.

In practice, this could mean anything from a news app serving more left-wing or right-wing content—through to facial recognition systems which flag some races and genders more than others.

A 2010 study (PDF) by researchers at NIST and the University of Texas found that algorithms designed and tested in East Asia are better at recognising East Asians, while those developed in Western countries are more accurate when detecting Caucasians.

Dr Nicolai Baldin, CEO and Founder of Synthesized, said:

“The reputational risk of all organisations is under threat due to biased data and we’ve seen this will no longer be tolerated at any level. It’s a burning priority now and must be dealt with as a matter of urgency, both from a legal and ethical standpoint.

Last year, Algorithmic Justice League founder Joy Buolamwini gave a presentation during the World Economic Forum on the need to fight AI bias. Buolamwini highlighted the massive disparities in effectiveness when popular facial recognition algorithms were applied to various parts of society.

Synthesized claims its platform is able to automatically identify bias across data attributes like gender, age, race, religion, sexual orientation, and more.

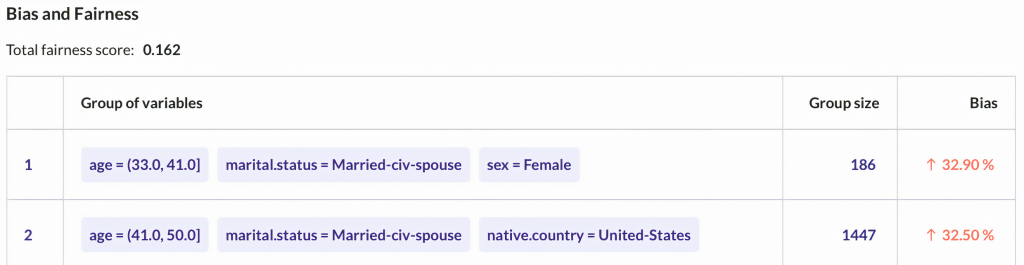

The platform was designed to be simple-to-use with no coding knowledge required. Users only have to upload a structured data file – as simple as a spreadsheet – to begin analysing for potential biases. A ‘Total Fairness Score’ will be provided to show what percentage of the provided dataset contained biases.

“Synthesized’s Community Edition for Bias Mitigation is one of the first offerings specifically created to understand, investigate, and root out bias in data,” explains Baldin. “We designed the platform to be very accessible, easy-to-use, and highly scalable, as organisations have data stored across a huge range of databases and data silos.”

Some examples of how Synthesized’s tool could be used across industries include:

- In finance, to create fairer credit ratings

- In insurance, for more equitable claims

- In HR, to eliminate biases in hiring processes

- In universities, for ensuring fairness in admission decisions

Synthesized’s platform uses a proprietary algorithm which is said to be quicker and more accurate than existing techniques for removing biases in datasets. A new synthetic dataset is created which, in theory, should be free of biases.

“With the generation of synthetic data, Synthesized’s platform gives its users the ability to equally distribute all attributes within a dataset to remove bias and rebalance the dataset completely,” the company says.

“Users can also manually change singular data attributes within a dataset, such as gender, providing granular control of the rebalancing process.”

If only MIT used such a tool on its dataset it was forced to remove in July after being found to be racist and misogynistic.

You can find out more about Synthesized’s tool and how to get started here.

(Photo by Agence Olloweb on Unsplash)