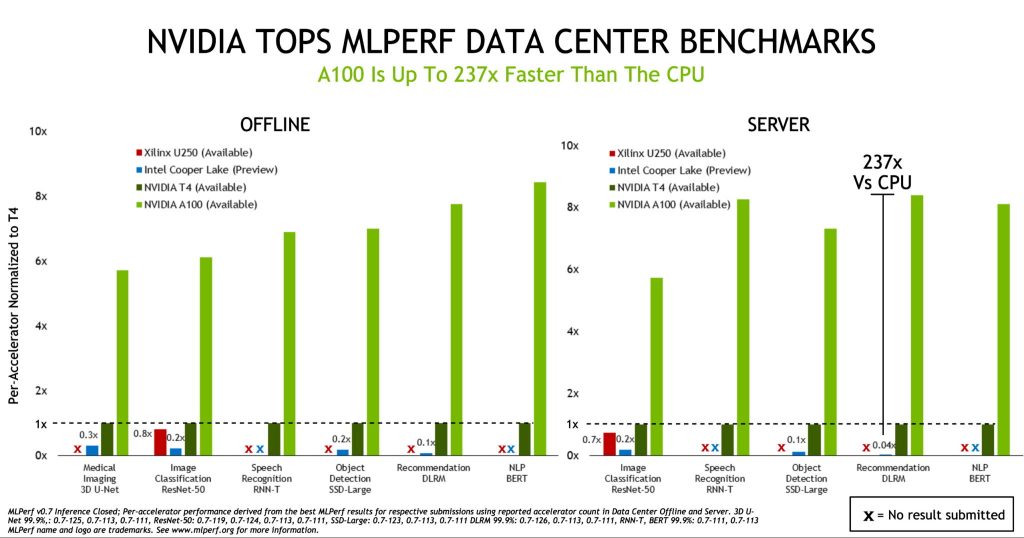

NVIDIA has set yet another record for AI inference in MLPerf with its A100 Tensor Core GPUs.

MLPerf consists of five inference benchmarks that cover the three main AI applications today: image classification, object detection, and translation.

“Industry-standard MLPerf benchmarks provide relevant performance data on widely used AI networks and help make informed AI platform buying decisions,” said Rangan Majumder, VP of Search and AI at Microsoft.

Last year, NVIDIA led all five benchmarks for both server and offline data centre scenarios with its Turing GPUs. A dozen companies participated.

This year, 23 companies participated in MLPerf, but NVIDIA maintained its lead, with the A100 outperforming CPUs by up to 237x in data centre inference.

For perspective, NVIDIA notes that a single NVIDIA DGX A100 system – with eight A100 GPUs – provides the same performance as nearly 1,000 dual-socket CPU servers on some AI applications.

“We’re at a tipping point as every industry seeks better ways to apply AI to offer new services and grow their business,” said Ian Buck, Vice President of Accelerated Computing at NVIDIA.

“The work we’ve done to achieve these results on MLPerf gives companies a new level of AI performance to improve our everyday lives.”

The widespread availability of NVIDIA’s AI platform through every major cloud and data centre infrastructure provider is unlocking huge potential for companies across various industries to improve their operations.
